💨 Jan 17-23rd: Depth Anything, AlphaGeometry, Replicate, WhisperSpeech, Nous-Hermes-2-Mixtral-8x7B-DPO, YALLM, PEFT, DeepSeek-MOE, 100,000 Genomes Project, Self-Rewarding Language Models, MAGNeT

+ God-Human-Animal-Machine, NeuralBeagle14-7B, AlphaFold psychedelics, Roboflow, SAM, YOLOv8, Skin Cancer Vaccine, AI and the Future of Work ...

💨 Jan 17-23rd: Depth Anything, AlphaGeometry: An Olympiad-level AI system for geometry (Google DeepMind), Is Open-Source AI Dangerous? (IEEE vs X), AI regulation (Joscha), God-Human-Animal-Machine (Meghan O'Gieblyn via Marginalia), Gen-AI: Artificial Intelligence and the Future of Work (IMF), Replicate, WhisperSpeech, Fine-tune a Mistral-7b model with Direct Preference Optimization (Maxime Labonne), Nous-Hermes-2-Mixtral-8x7B-DPO, YALLM, PEFT (Parameter-Efficient Fine-Tuning), NeuralBeagle14-7B, AlphaCodium → Flow Engineering, DeepSeek-MOE, 100,000 Genomes Project, MAGNeT, Self-Rewarding Language Models, Skin Cancer vaccine clinical trials (mRNA-4157 + Keytruda), AlphaFold found thousands of possible psychedelics, Roboflow, SAM, YOLOv8

🏓 Observations: Is Open-Source AI Dangerous? (IEEE vs X), AI regulation (Joscha), God, Human, Animal, Machine (Meghan O'Gieblyn via Marginalia), Gen-AI: Artificial Intelligence and the Future of Work (IMF)

✭ David "JoelKatz" Schwartz on X: "Only wealthy and privileged people should have access to computer tools that can perform unbiased analyses. Everyone else should have access to tools purged of anything the elites judge to be wrongthink…" → @martin_casado Jan 14: "Sad to see @IEEESpectrum with a big fuck you to open source." ✭ Open-Source AI Is Uniquely Dangerous - IEEE Spectrum “But the regulations that could rein it in would benefit all of AI”

✭ Joscha Bach on X: "From the annals of AI regulation" ✭ Robin Hanson on X: ""Englishman William Lee, who invented an early mechanized knitter that would allow people to make clothes in a fraction of the normal time. In 1589, he finally secured an audience with Queen Elizabeth I with the hopes of being issued a patent. Sadly, his hopes were crushed, because Elizabeth feared that the machine would destabilize the realm by throwing too many people out of work. Attempts to acquire a patent in France and from Elizabeth’s successor James I were also refused on the same grounds."

✭God, Human, Animal, Machine: Consciousness and Our Search for Meaning in the Age of Artificial Intelligence – The Marginalian “To lose the appetite for meaning we call thinking and cease to ask unanswerable questions,” Hannah Arendt wrote in her exquisite reckoning with the life of the mind, would be to “lose not only the ability to produce those thought-things that we call works of art but also the capacity to ask all the answerable questions upon which every civilization is founded.” ~ I have returned to this sentiment again and again in facing the haunting sense that we are living through the fall of a civilization — a civilization that has reduced every askable question to an algorithmically answerable datum and has dispensed with the unasked, with those regions of the mysterious where our basic experiences of enchantment, connection, and belonging come alive. A century and half after the Victorian visionary Samuel Butler prophesied the rise of a new “mechanical kingdom” to which we will become subservient, we are living with artificial intelligences making daily decisions for us, from the routes we take to the music we hear. And yet the very fact that the age of near-sentient algorithms has left us all the more famished for meaning may be our best hope for saving what is most human and alive in us. ~ So intimates Meghan O’Gieblyn in God, Human, Animal, Machine: Technology, Metaphor, and the Search for Meaning.” ✭ God, Human, Animal, Machine by Meghan O'Gieblyn: 9780525562719 | PenguinRandomHouse.com: Books “For most of human history the world was a magical and enchanted place ruled by forces beyond our understanding. The rise of science and Descartes’s division of mind from world made materialism our ruling paradigm, in the process asking whether our own consciousness—i.e., souls—might be illusions. 
Now the inexorable rise of technology, with artificial intelligences that surpass our comprehension and control, and the spread of digital metaphors for self-understanding, the core questions of existence—identity, knowledge, the very nature and purpose of life itself—urgently require rethinking.” ~ ”To discover truth, it is necessary to work within the metaphors of our own time, which are for the most part technological. Today artificial intelligence and information technologies have absorbed many of the questions that were once taken up by theologians and philosophers: the mind’s relationship to the body, the question of free will, the possibility of immortality. These are old problems, and although they now appear in different guises and go by different names, they persist in conversations about digital technologies much like those dead metaphors that still lurk in the syntax of contemporary speech. All the eternal questions have become engineering problems.”

✭ AI Will Transform the Global Economy. Let’s Make Sure It Benefits Humanity. | IMF “In advanced economies, about 60 percent of jobs may be impacted by AI. Roughly half the exposed jobs may benefit from AI integration, enhancing productivity. For the other half, AI applications may execute key tasks currently performed by humans, which could lower labor demand, leading to lower wages and reduced hiring. In the most extreme cases, some of these jobs may disappear. ~ In emerging markets and low-income countries, by contrast, AI exposure is expected to be 40 percent and 26 percent, respectively. These findings suggest emerging market and developing economies face fewer immediate disruptions from AI. At the same time, many of these countries don’t have the infrastructure or skilled workforces to harness the benefits of AI, raising the risk that over time the technology could worsen inequality among nations.” ✭ Gen-AI: Artificial Intelligence and the Future of Work | IMF

🛠️ Tech: Replicate, WhisperSpeech, Fine-tune a Mistral-7b model with Direct Preference Optimization (Maxime Labonne), Nous-Hermes-2-Mixtral-8x7B-DPO, YALLM, PEFT (Parameter-Efficient Fine-Tuning), NeuralBeagle14-7B, MAGNeT

✭ Replicate “Run AI with an API. ~ Run and fine-tune open-source models. Deploy custom models at scale. All with one line of code.~ Thousands of models contributed by our community ± All the latest open-source models are on Replicate. They’re not just demos — they all actually work and have production-ready APIs. ± AI shouldn’t be locked up inside academic papers and demos. Make it real by pushing it to Replicate.”

✭ GitHub - collabora/WhisperSpeech: An Open Source text-to-speech system built by inverting Whisper. “An Open Source text-to-speech system built by inverting Whisper.”

✭Fine-tune a Mistral-7b model with Direct Preference Optimization | by Maxime Labonne | Jan, 2024 | Towards Data Science “Pre-trained Large Language Models (LLMs) can only perform next-token prediction, making them unable to answer questions. This is why these base models are then fine-tuned on pairs of instructions and answers to act as helpful assistants. However, this process can still be flawed: fine-tuned LLMs can be biased, toxic, harmful, etc. This is where Reinforcement Learning from Human Feedback (RLHF) comes into play. ~ RLHF provides different answers to the LLM, which are ranked according to a desired behavior (helpfulness, toxicity, etc.). The model learns to output the best answer among these candidates, hence mimicking the behavior we want to instill. Often seen as a way to censor models, this process has recently become popular for improving performance, as shown in neural-chat-7b-v3–1. ~ In this article, we will create NeuralHermes-2.5, by fine-tuning OpenHermes-2.5 using a RLHF-like technique: Direct Preference Optimization (DPO). For this purpose, we will introduce a preference dataset, describe how the DPO algorithm works, and apply it to our model. We’ll see that it significantly improves the performance of the base model on the Open LLM Leaderboard.”
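
To make the DPO idea above concrete, here is a minimal sketch of the per-pair DPO loss in plain Python. It is not taken from the article: the function name `dpo_loss`, the argument names, and the default `beta=0.1` are assumptions for illustration. The inputs are summed log-probabilities of the chosen and rejected answers under the model being trained (the policy) and under a frozen reference copy.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-pair DPO loss: -log(sigmoid(beta * (chosen_margin - rejected_margin))).

    All names and the default beta are illustrative assumptions.
    """
    # How much more (in log-prob) the policy likes each answer than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) == log(1 + exp(-x)), written in a numerically stable form.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))
```

When the policy and the reference agree exactly, the loss is log 2; it drops as the policy shifts probability toward the chosen answer and away from the rejected one.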

✭NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO · Hugging Face “Nous Hermes 2 Mixtral 8x7B DPO is the new flagship Nous Research model trained over the Mixtral 8x7B MoE LLM. ~ The model was trained on over 1,000,000 entries of primarily GPT-4 generated data, as well as other high quality data from open datasets across the AI landscape, achieving state of the art performance on a variety of tasks.”

✭Yet Another LLM Leaderboard - a Hugging Face Space by mlabonne “Leaderboard made with 🧐 LLM AutoEval using Nous benchmark suite.”

✭ PEFT “🤗 PEFT (Parameter-Efficient Fine-Tuning) is a library for efficiently adapting large pretrained models to various downstream applications without fine-tuning all of a model’s parameters because it is prohibitively costly. PEFT methods only fine-tune a small number of (extra) model parameters - significantly decreasing computational and storage costs - while yielding performance comparable to a fully fine-tuned model. This makes it more accessible to train and store large language models (LLMs) on consumer hardware.”
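
A rough sketch of why fine-tuning "a small number of (extra) model parameters" is cheap, using LoRA, one of the methods the PEFT library implements. This is plain Python, not the PEFT API: the helper names `lora_param_counts`, `matvec`, and `lora_forward` are hypothetical, and the shapes are toy values.

```python
def lora_param_counts(d_in, d_out, rank):
    """Trainable parameters: full fine-tuning vs. a rank-`rank` LoRA adapter."""
    full = d_in * d_out              # every entry of the weight matrix W
    lora = rank * (d_in + d_out)     # A is (rank x d_in), B is (d_out x rank)
    return full, lora


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]
```

For a 4096 x 4096 layer at rank 8, `lora_param_counts(4096, 4096, 8)` gives 16,777,216 parameters for full fine-tuning against 65,536 for the adapter. With B initialised to zeros, `lora_forward` returns exactly the frozen layer's output, so training starts from the pretrained behaviour.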

✭ Meta Launches MAGNeT, An Open-Source Text-to-Audio Model for On-the-Go Music Creation “the non-autoregressive design predicts masked token spans simultaneously, accelerating the generation process and simplifying the model by employing a single-stage transformer for both the encoder and decoder.” ✭ audiocraft/docs/MAGNET.md at main · facebookresearch/audiocraft · GitHub “AudioCraft provides the code and models for MAGNeT, Masked Audio Generation using a Single Non-Autoregressive Transformer. MAGNeT is a text-to-music and text-to-sound model capable of generating high-quality audio samples conditioned on text descriptions. It is a masked generative non-autoregressive Transformer trained over a 32kHz EnCodec tokenizer with 4 codebooks sampled at 50 Hz. Unlike prior work on masked generative audio Transformers, such as SoundStorm and VampNet, MAGNeT doesn't require semantic token conditioning, model cascading or audio prompting, and employs a full text-to-audio using a single non-autoregressive Transformer. Check out our sample page or test the available demo! We use 16K hours of licensed music to train MAGNeT. Specifically, we rely on an internal dataset of 10K high-quality music tracks, and on the ShutterStock and Pond5 music data.”

✭ Bindu Reddy on X: "Superhuman AI Agents Require Superhuman Feedback! Fundamentally, AI models are limited by their teachers. Today, their teachers happen to be humans. TLDR: The only way to surpass human performance in future LLMs is to have LLMs train themselves and get better with each iteration."

🔎 Research: AlphaGeometry: An Olympiad-level AI system for geometry (Google DeepMind), AlphaCodium → Flow Engineering, DeepSeek-MOE, 100,000 Genomes Project, Skin Cancer vaccine clinical trials (mRNA-4157 + Keytruda), Self-Rewarding Language Models, AlphaFold found thousands of possible psychedelics

✭AlphaGeometry: An Olympiad-level AI system for geometry - Google DeepMind “AlphaGeometry, an AI system that solves complex geometry problems at a level approaching a human Olympiad gold-medalist - a breakthrough in AI performance. In a benchmarking test of 30 Olympiad geometry problems, AlphaGeometry solved 25 within the standard Olympiad time limit. For comparison, the previous state-of-the-art system solved 10 of these geometry problems, and the average human gold medalist solved 25.9 problems. ~ ... by developing a method to generate a vast pool of synthetic training data - 100 million unique examples - we can train AlphaGeometry without any human demonstrations, sidestepping the data bottleneck. ~ ... We are open-sourcing the AlphaGeometry code and model, and hope that together with other tools and approaches in synthetic data generation and training, it helps open up new possibilities across mathematics, science, and AI. ~ ... AlphaGeometry is a neuro-symbolic system made up of a neural language model and a symbolic deduction engine, which work together to find proofs for complex geometry theorems. Akin to the idea of “thinking, fast and slow”, one system provides fast, “intuitive” ideas, and the other, more deliberate, rational decision-making.” ✭ Solving olympiad geometry without human demonstrations | Nature “Proving mathematical theorems at the olympiad level represents a notable milestone in human-level automated reasoning owing to their reputed difficulty among the world’s best talents in pre-university mathematics. … On a test set of 30 latest olympiad-level problems, AlphaGeometry solves 25, outperforming the previous best method that only solves ten problems and approaching the performance of an average International Mathematical Olympiad (IMO) gold medallist. 
Notably, AlphaGeometry produces human-readable proofs, solves all geometry problems in the IMO 2000 and 2015 under human expert evaluation and discovers a generalized version of a translated IMO theorem in 2004.” ✭ GitHub - google-deepmind/alphageometry
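
A toy sketch of the neuro-symbolic loop described above: a symbolic engine deduces everything it can, and when it gets stuck a proposer (standing in for the language model) suggests one extra construction. This is not the AlphaGeometry code; `deduce`, `solve`, the string facts, and the rule format are all hypothetical simplifications.

```python
def deduce(facts, rules):
    """Forward-chain over (premises, conclusion) rules until nothing new appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


def solve(facts, rules, goal, propose, max_constructions=3):
    """Alternate symbolic deduction with proposed auxiliary constructions.

    `propose(known, goal)` plays the role of the neural model: it returns a
    new fact to add, or None when it has no suggestion.
    """
    known = deduce(facts, rules)
    added = []
    for _ in range(max_constructions):
        if goal in known:
            break
        suggestion = propose(known, goal)
        if suggestion is None:
            break
        added.append(suggestion)
        known = deduce(known | {suggestion}, rules)
    return goal in known, added
```

The division of labour is the point of the sketch: the deduction step is exhaustive but cannot invent anything, while the proposer contributes the "intuitive" step that unlocks further deductions.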

✭ GitHub - Codium-ai/AlphaCodium “a new approach to code generation by LLMs, which we call AlphaCodium - a test-based, multi-stage, code-oriented iterative flow, that improves the performances of LLMs on code problems. ~ We tested AlphaCodium on a challenging code generation dataset called CodeContests, which includes competitive programming problems from platforms such as Codeforces. The proposed flow consistently and significantly improves results. On the validation set, for example, GPT-4 accuracy (pass@5) increased from 19% with a single well-designed direct prompt to 44% with the AlphaCodium flow. ~ Many of the principles and best practices we acquired in this work, we believe, are broadly applicable to general code generation tasks.”

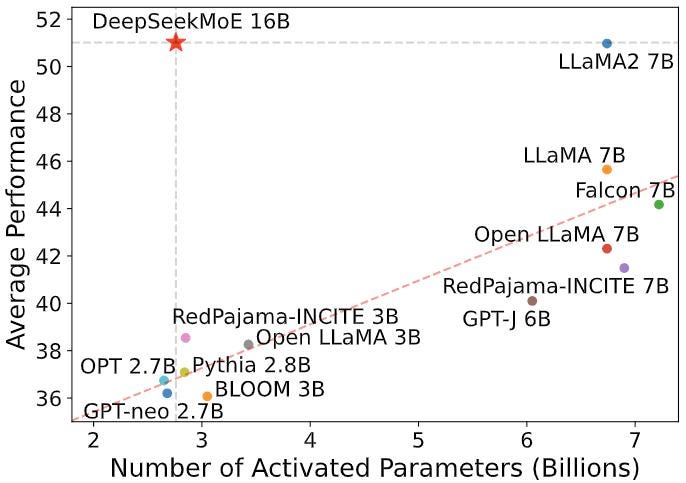
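
For reading figures like the pass@5 numbers quoted above, here is a small sketch of the unbiased pass@k estimator commonly used in code-generation benchmarks: the probability that at least one of k samples, drawn from n generated solutions of which c are correct, passes. This is an assumption about the general metric; AlphaCodium's own evaluation protocol may differ in its details.

```python
from math import comb


def pass_at_k(n, c, k):
    """Estimate pass@k from n generated samples, c of which are correct (k <= n)."""
    if n - c < k:
        # Fewer than k incorrect samples exist, so any k samples include a correct one.
        return 1.0
    # 1 minus the probability that all k drawn samples are incorrect.
    return 1.0 - comb(n - c, k) / comb(n, k)
```

Averaging `pass_at_k` over every problem in a benchmark gives the headline score.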

✭ GitHub - deepseek-ai/DeepSeek-MoE “DeepSeekMoE 16B is a Mixture-of-Experts (MoE) language model with 16.4B parameters. It employs an innovative MoE architecture, which involves two principal strategies: fine-grained expert segmentation and shared experts isolation. It is trained from scratch on 2T English and Chinese tokens, and exhibits comparable performance with DeepSeek 7B and LLaMA2 7B, with only about 40% of computations. For research purposes, we release the model checkpoints of DeepSeekMoE 16B Base and DeepSeekMoE 16B Chat to the public, which can be deployed on a single GPU with 40GB of memory without the need for quantization.” ✭ Shahul Es on X: "The DeepSeek paper has made a significant breakthrough in Mixture of Experts (MoEs) models. 1/n https://t.co/YUYVo9E7Vq"
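
A toy sketch of the two strategies named above: shared experts that every token always passes through, plus a larger pool of small routed experts of which only the top-k (by router score) are activated. It is a simplification under stated assumptions, not DeepSeek's implementation: experts are plain functions on a scalar, and `softmax`, `moe_layer`, and all argument names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x, router_scores, routed_experts, shared_experts, top_k):
    """Combine always-on shared experts with the top_k gated routed experts."""
    gates = softmax(router_scores)
    # Indices of the top_k routed experts with the largest gate values.
    top = sorted(range(len(gates)), key=lambda i: gates[i], reverse=True)[:top_k]
    out = sum(expert(x) for expert in shared_experts)          # shared: no gating
    out += sum(gates[i] * routed_experts[i](x) for i in top)   # routed: gated, sparse
    return out, sorted(top)
```

Only `top_k` of the routed experts run per token, which is where the compute saving relative to a dense model of similar total size comes from.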

✭ Integrating Genomic and Clinical Data from the 100,000 Genomes Cancer Program Paves the Way for Precision Oncology - CBIRT “In order to establish standardized high-throughput whole-genome sequencing (WGS) for patients with cancer and rare diseases, the UK Government launched the groundbreaking 100,000 Genomes Project within the National Health Service (NHS) in England. This was accomplished through the use of an automated bioinformatics pipeline accredited by the International Organisation for Standardisation. Operating in conjunction with NHS England, Genomics England examined WGS data from 13,880 solid tumors representing 33 different cancer types, fusing genetic information with actual treatment and outcome data in a secure research environment. WGS and longitudinal life course clinical data were linked, enabling the evaluation of treatment results for patients categorized by pangenomic markers. The results of this study show how useful it is to connect genomic and practical clinical data in order to do survival analysis, locate cancer genes that influence prognosis, and deepen our knowledge of how cancer genomics affects patient outcomes.”

✭Moderna And Merck Announce mRNA-4157 (V940) In Combination with Keytruda(R) (Pembrolizumab) Demonstrated Continued Improvement in Recurrence-Free Survival and Distant Metastasis-Free Survival in Patients with High-Risk Stage III/IV Melanoma Following Comp “mRNA-4157 (V940) is a novel investigational messenger RNA (mRNA)-based individualized neoantigen therapy (INT) consisting of a synthetic mRNA coding for up to 34 neoantigens that is designed and produced based on the unique mutational signature of the DNA sequence of the patient's tumor. Upon administration into the body, the algorithmically derived and RNA-encoded neoantigen sequences are endogenously translated and undergo natural cellular antigen processing and presentation, a key step in adaptive immunity. ~ Individualized neoantigen therapies are designed to train and activate an antitumor immune response by generating specific T-cell responses based on the unique mutational signature of a patient's tumor. KEYTRUDA is an immunotherapy that works by increasing the ability of the body's immune system to help detect and fight tumor cells. As previously announced from the Phase 2b KEYNOTE-942/mRNA-4157-P201 trial evaluating patients with high-risk stage III/IV melanoma, combining mRNA-4157 (V940) with KEYTRUDA may provide a meaningful benefit over KEYTRUDA alone.”

✭ [2401.10020] Self-Rewarding Language Models “We posit that to achieve superhuman agents, future models require superhuman feedback in order to provide an adequate training signal. Current approaches commonly train reward models from human preferences, which may then be bottlenecked by human performance level, and secondly these separate frozen reward models cannot then learn to improve during LLM training. In this work, we study Self-Rewarding Language Models, where the language model itself is used via LLM-as-a-Judge prompting to provide its own rewards during training. We show that during Iterative DPO training that not only does instruction following ability improve, but also the ability to provide high-quality rewards to itself. Fine-tuning Llama 2 70B on three iterations of our approach yields a model that outperforms many existing systems on the AlpacaEval 2.0 leaderboard, including Claude 2, Gemini Pro, and GPT-4 0613. While only a preliminary study, this work opens the door to the possibility of models that can continually improve in both axes.”

✭AlphaFold found thousands of possible psychedelics. Will its predictions help drug discovery? | Nature “Researchers have doubted how useful the AI protein-structure tool will be in discovering medicines — now they are learning how to deploy it effectively.” ✭ AlphaFold2 structures template ligand discovery | bioRxiv “AlphaFold2 (AF2) and RosettaFold have greatly expanded the number of structures available for structure-based ligand discovery, even though retrospective studies have cast doubt on their direct usefulness for that goal. Here, we tested unrefined AF2 models prospectively, comparing experimental hit-rates and affinities from large library docking against AF2 models vs the same screens targeting experimental structures of the same receptors. … Intriguingly, against the 5-HT2A receptor the most potent, subtype-selective agonists were discovered via docking against the AF2 model, not the experimental structure. To understand this from a molecular perspective, a cryoEM structure was determined for one of the more potent and selective ligands to emerge from docking against the AF2 model of the 5-HT2A receptor. Our findings suggest that AF2 models may sample conformations that are relevant for ligand discovery, much extending the domain of applicability of structure-based ligand discovery.”

✭ [2401.10891] Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data “This work presents Depth Anything, a highly practical solution for robust monocular depth estimation. Without pursuing novel technical modules, we aim to build a simple yet powerful foundation model dealing with any images under any circumstances. To this end, we scale up the dataset by designing a data engine to collect and automatically annotate large-scale unlabeled data (~62M), which significantly enlarges the data coverage and thus is able to reduce the generalization error. We investigate two simple yet effective strategies that make data scaling-up promising. First, a more challenging optimization target is created by leveraging data augmentation tools. It compels the model to actively seek extra visual knowledge and acquire robust representations. Second, an auxiliary supervision is developed to enforce the model to inherit rich semantic priors from pre-trained encoders. We evaluate its zero-shot capabilities extensively, including six public datasets and randomly captured photos. It demonstrates impressive generalization ability. Further, through fine-tuning it with metric depth information from NYUv2 and KITTI, new SOTAs are set. Our better depth model also results in a better depth-conditioned ControlNet. Our models are released at https://depth-anything.github.io/”

👀 Watching: CursorConnect

Alpha Everywhere: AlphaGeometry, AlphaCodium and the Future of LLMs

Trieu Trinh, author of AlphaGeometry

🖲️AI Art-Research:

✭ KREA AI on X: "announcing three new features. text-to-image, background removal, and eraser. try them now for free!" → demo

✭ Alie Jules on X: "Revisiting one-word prompts with v 6."

👁️‍🗨️ Attempting to Learn a bit about: Roboflow, SAM, YOLOv8

Roboflow: Accelerate Image Annotation with SAM and Grounding DINO | Python Tutorial

✭ Home - Ultralytics YOLOv8 Docs “Introducing Ultralytics YOLOv8, the latest version of the acclaimed real-time object detection and image segmentation model. YOLOv8 is built on cutting-edge advancements in deep learning and computer vision, offering unparalleled performance in terms of speed and accuracy. Its streamlined design makes it suitable for various applications and easily adaptable to different hardware platforms, from edge devices to cloud APIs.”

Episode 15 | Ultralytics YOLO Quickstart Guide [baby steps]

YOLO VISION 2023 | Upgrade Any camera with Ultralytics YOLOv8 in a No-Code Way [corporate context]