Hallucinations are (almost) all you Need [video release]

An AI-generated audio-visual artistic lecture about AI advances.

Fundamental research in science is being transformed by hallucinations; the arts are being transfigured by research; and if simulations are integral to cognition, then AI may already emulate a 'self'.

Synopsis

This rapid artistic overview of key scientific AI examples (covering a year loosely defined as beginning with the release of GPT-4 on March 14th, 2023) is framed by the hypothesis that fundamental research in science is being transformed by a practice predominantly associated with the arts: namely, hallucinations. Inversely, the arts are being transfigured by a practice predominantly associated with science: namely, research. It's also a theory about the incipient subjectivity of AI and how that relates to aesthetics. And it's told entirely with AI-generated audio-visuals and voices, including a cloned voice of the author.

*

Disclaimer: Hallucinations in people are conventionally associated with mental illness, drugs, and/or genius. Hallucinations in AI (mostly in large language models) have been critiqued as net negatives, contributing to disinformation, bias, post-truth, deepfakes, the collapse of democracy, copyright theft, etc.

Intention: While cognizant of the multiple potential existential risks of AI (economic perils, bias, disinformation, deepfakes, autonomous weapons, bio-hacks, ...), in this age of reactive anxiety the following is an attempt to energize the ideal of optimal flourishing for all sentient entities.

*

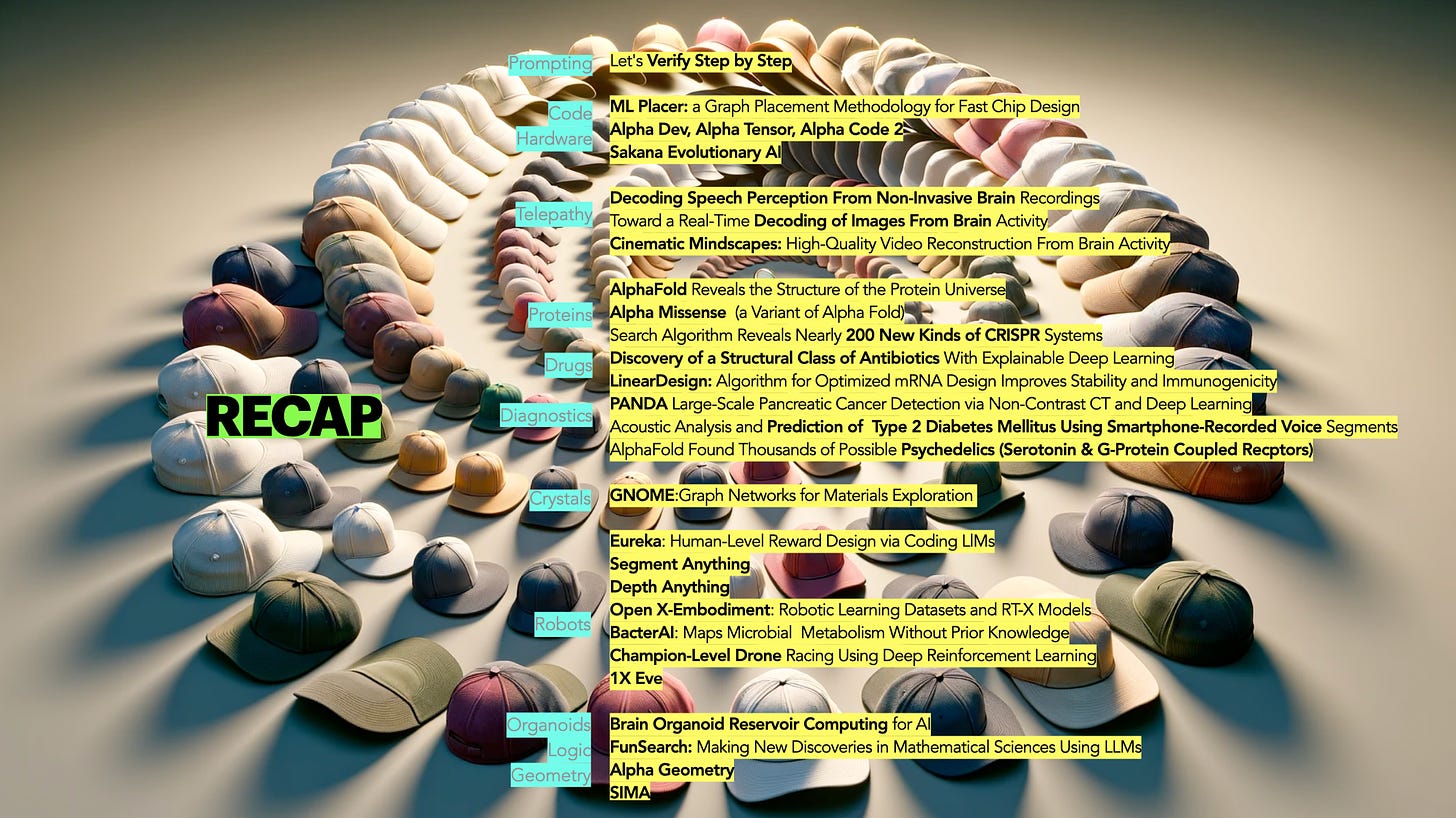

Tech Claim: AI hallucinations (of proteins, crystals, algorithms, circuits, etc.), pruned down to the feasible, are contributing to a revolutionary acceleration of scientific discoveries in areas such as numeric-algorithmic optimization, AI hardware accelerators, reward-mechanism design, non-invasive brain sensors, drug discovery, sustainable deep-tech materials, autonomous lab robotics, neuromorphic organoid computing, and mathematical reasoning.

Speculative Identity Claim: Drawing on citations from Katherine Hayles, Donna Haraway, Michael Levin, Bud Craig, and Thomas Metzinger, Hallucinations are (almost) all you Need advances the speculative notion that if mind is everywhere and simulations underlie cognition, then current AI may already internally emulate incipient self-identity formations.

In both art and science, hallucinations are almost enough: without the pruning down to the plausible, there is just a sprawl of potentiality.

Segue (a brief bridge from Sutskever to Sora & Suno) (4m36s)

#genAI (a quick review of a few releases, 2023 to mid-2024) (5m14s)

Conclusions (16m52s)

Acknowledgements

And of course, everything will remain much the same,

until humanity collectively recognizes that hallucinations are what we are, as is everything else.

This work was created by David Jhave Johnston as a postdoc at the University of Bergen's Center for Digital Narrative. I am grateful to co-directors Scott Rettberg and Jill Walker Rettberg, and to many members of the CDN, for encouragement, insights, and feedback.

This research is partially supported by the Research Council of Norway Centres of Excellence program, project number 332643, Center for Digital Narrative, and project number 335129, Extending Digital Narrative.

A huge thank you to the generous, gifted, swift, and talented intelligence of Drew Keller (CDN Resident & Microsoft Senior Content Developer) for final video edits, sound balancing, and fundamental structural and analytical advice as the project developed. The project improved immeasurably due to his narrative guidance and technical expertise.

Tech

Videos generated primarily using Haiper.

Speculation section video generated using Pika.

Audio generated primarily using Suno.

Speculation section audio generated using Riffusion.

Voiceover generated using ElevenLabs.

Upscaling: Topaz Labs.

For the video, full essay, citations, and soundtrack, see: http://glia.ca/2024/Hallucinations/